Running LangChain and LLM Models on AMD ROCm

System Requirements

- An AMD GPU that supports ROCm (check the compatibility list on the docs.amd.com page)

- A Linux-based operating system, preferably Ubuntu 20.04 or 22.04

- Docker, plus a Conda environment

- Python 3.8 or higher

For ROCm installation, refer to this link:

https://rocm.docs.amd.com/en/latest/

For Docker runtime installation, refer to this link:

https://docs.docker.com/engine/install/ubuntu/#install-using-the-repository

For Conda installation (inside the container), refer to this link:

https://vegastack.com/tutorials/how-to-install-anaconda-on-ubuntu-22-04/

Current Test System

For this guide, I used the following setup:

- AMD Instinct MI210 GPU

- ROCm 6.0

- Ubuntu 20.04

- Docker 25

These values can be checked on the host:

    $ apt show rocm-libs -a
    Package: rocm-libs
    Version: 6.0.0.60000-91~20.04

    $ lsb_release -a
    Distributor ID: Ubuntu
    Description: Ubuntu 20.04.6 LTS
    Release: 20.04
    Codename: focal

    $ docker -v
    Docker version 25.0.1, build 29cf629

Using a Docker Image for ROCm

Once ROCm is installed on the host OS, we can run a container with a specific ROCm, Python, and PyTorch version.

We use the -d -it options to keep the container running in the background so we can work inside it.

Change --shm-size to the amount of shared memory this container may use on your system.

    sudo docker run -d -it \
      --network=host \
      --device=/dev/kfd \
      --device=/dev/dri \
      --ipc=host \
      --shm-size 32G \
      --group-add=video \
      --cap-add=SYS_PTRACE \
      --security-opt seccomp=unconfined \
      --workdir=/dockerx \
      --name=llm-with-langchain-rocm \
      -v $HOME/dockerx:/dockerx \
      rocm/pytorch:rocm5.7_ubuntu22.04_py3.10_pytorch_2.0.1 /bin/bash

For other image versions and pre-installed software, refer to Docker Hub:

https://hub.docker.com/r/rocm/pytorch/tags
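If you create the container from a script, the same command can be kept as an argument list, which avoids the line-continuation pitfalls of a long shell command. This is a minimal sketch; the function name build_docker_run_cmd and its defaults are illustrative and simply mirror the flags above, with $HOME expanded in Python instead of by the shell.

```python
import os
import shlex
from typing import List

# Default host directory, equivalent to $HOME/dockerx in the shell command
DEFAULT_HOST_DIR = os.path.join(os.path.expanduser("~"), "dockerx")


def build_docker_run_cmd(
    image: str = "rocm/pytorch:rocm5.7_ubuntu22.04_py3.10_pytorch_2.0.1",
    name: str = "llm-with-langchain-rocm",
    shm_size: str = "32G",
    host_dir: str = DEFAULT_HOST_DIR,
    workdir: str = "/dockerx",
) -> List[str]:
    """Return the docker run command from this guide as an argument list."""
    return [
        "sudo", "docker", "run", "-d", "-it",
        "--network=host",
        # /dev/kfd is the ROCm compute interface; /dev/dri holds the GPU render nodes
        "--device=/dev/kfd",
        "--device=/dev/dri",
        "--ipc=host",
        "--shm-size", shm_size,
        "--group-add=video",
        "--cap-add=SYS_PTRACE",
        "--security-opt", "seccomp=unconfined",
        f"--workdir={workdir}",
        f"--name={name}",
        "-v", f"{host_dir}:{workdir}",
        image,
        "/bin/bash",
    ]


if __name__ == "__main__":
    # Print the equivalent shell command; pass the list to subprocess.run() to execute it
    print(shlex.join(build_docker_run_cmd(shm_size="16G")))
```

Passing the list to subprocess.run(cmd, check=True) starts the container without going through a shell, so no quoting or backslash continuations are involved.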

    $ sudo docker ps
    CONTAINER ID   IMAGE                                                   COMMAND       CREATED          STATUS          PORTS     NAMES
    3e88bafb5844   rocm/pytorch:rocm5.7_ubuntu22.04_py3.10_pytorch_2.0.1   "/bin/bash"   11 seconds ago   Up 11 seconds             llm-with-langchain-rocm

Go inside the container and verify the Docker image (you can also use the container name, llm-with-langchain-rocm, instead of the ID):

    $ sudo docker exec -it 3e88bafb5844 /bin/bash
    root@amdserver:/dockerx# conda --version
    conda 23.7.4
    root@amdserver:/dockerx# lsb_release -a
    No LSB modules are available.
    Distributor ID: Ubuntu
    Description: Ubuntu 22.04.3 LTS
    Release: 22.04
    Codename: jammy
    root@amdserver:/dockerx# apt show rocm-libs -a
    Package: rocm-libs
    Version: 5.7.0.50700-63~22.04
    root@amdserver:/dockerx# python --version
    Python 3.10.13
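Besides the OS and package checks, it helps to confirm from Python that the PyTorch in the image is a ROCm build and can see the GPU. This is a minimal sketch (the function name rocm_status is illustrative); it relies on torch.version.hip, which is a version string on ROCm builds and None otherwise, and on ROCm builds exposing AMD GPUs through the torch.cuda API.

```python
import importlib.util
from typing import Dict, Optional


def rocm_status() -> Dict[str, Optional[object]]:
    """Report whether PyTorch is installed and whether it sees a ROCm GPU."""
    info: Dict[str, Optional[object]] = {
        "torch_version": None,
        "hip_version": None,
        "gpu_available": False,
        "device_name": None,
    }
    if importlib.util.find_spec("torch") is None:
        return info  # PyTorch is not installed in this environment
    import torch

    info["torch_version"] = torch.__version__
    # Set to a HIP version string on ROCm builds, None on CUDA or CPU-only builds
    info["hip_version"] = getattr(torch.version, "hip", None)
    # ROCm builds reuse the torch.cuda API for AMD GPUs
    info["gpu_available"] = bool(torch.cuda.is_available())
    if info["gpu_available"]:
        info["device_name"] = torch.cuda.get_device_name(0)
    return info


if __name__ == "__main__":
    for key, value in rocm_status().items():
        print(f"{key}: {value}")
```

Inside this container you would expect a non-empty hip_version and gpu_available: True; a None hip_version means a non-ROCm PyTorch is being picked up.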

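Now create a Conda environment and initialize Conda for the shell. Cloning the image's preinstalled py_3.10 environment keeps its ROCm build of PyTorch; a bare conda create --name env_llm creates an environment without Python or pip, so pip still resolves to py_3.10 (as the pip path shown below confirms).

    conda create --name env_llm --clone py_3.10
    conda init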

Log out and back in (or start a new shell) so the changes from conda init take effect, then activate the environment:

    conda activate env_llm

Install the basic libraries for LangChain and the LLM:

    (env_llm) root@amdserver:/dockerx# pip install langchain openai tiktoken transformers accelerate cohere
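To record exactly which versions pip resolved (useful for pinning them later), you can query the installed distributions from Python. This is a minimal sketch using the standard-library importlib.metadata (Python 3.8+); the helper name installed_versions is illustrative, and pip freeze gives the same information for every installed package.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Dict, Iterable, Optional

# Packages from the pip install line above
PACKAGES = ("langchain", "openai", "tiktoken", "transformers", "accelerate", "cohere")


def installed_versions(packages: Iterable[str] = PACKAGES) -> Dict[str, Optional[str]]:
    """Map each distribution name to its installed version, or None if it is missing."""
    result: Dict[str, Optional[str]] = {}
    for name in packages:
        try:
            result[name] = version(name)
        except PackageNotFoundError:
            result[name] = None
    return result


if __name__ == "__main__":
    # Print lines in requirements.txt format so they can be reused for pinning
    for name, ver in installed_versions().items():
        print(f"{name}=={ver}" if ver else f"# {name} is not installed")
```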

These are the versions we used during installation; if you hit issues with other versions, try matching them:

    (env_llm) root@amdserver:/dockerx# python --version
    Python 3.10.13
    (env_llm) root@amdserver:/dockerx# pip --version
    pip 24.0 from /opt/conda/envs/py_3.10/lib/python3.10/site-packages/pip (python 3.10)

LangChain LLM Script

Use this sample script to check that everything works.

llm.py

    # Import libraries
    import warnings

    from transformers import AutoTokenizer, AutoModelForCausalLM, pipeline
    from langchain.prompts import PromptTemplate
    from langchain_community.llms.huggingface_pipeline import HuggingFacePipeline


    def run_myllm():
        # Prompt template for the chain
        template = """Question: {question}

    Answer: Let's think step by step."""
        prompt = PromptTemplate.from_template(template)

        # Model: Yi-6B is used because it is lightweight; Mixtral is tried later.
        # Both models are available on the Hugging Face Hub.
        model_id = "01-ai/Yi-6B"
        # model_id = "mistralai/Mixtral-8x7B-Instruct-v0.1"

        # Tokenizer
        tokenizer = AutoTokenizer.from_pretrained(model_id)

        # Model weights; device_map="auto" places the layers on the available GPU(s)
        model = AutoModelForCausalLM.from_pretrained(
            model_id,
            device_map="auto",  # for CPU only, use device_map="cpu"
        )

        # Combine the tokenizer and model into a text-generation pipeline
        pipe = pipeline(
            "text-generation",
            model=model,
            tokenizer=tokenizer,
            do_sample=True,  # without sampling, temperature and top_p are ignored
            repetition_penalty=1.2,
            top_p=0.4,
            temperature=0.4,
            max_new_tokens=1000,
        )
        gpu_llm = HuggingFacePipeline(pipeline=pipe)

        # Finally, combine the prompt and the LLM into a chain
        gpu_chain = prompt | gpu_llm

        question = "Write a report the life of Thomas Jefferson and a separate report John Hopkins. Each report must be at least 1000 words. The report must be complete. Provide a critique of the report and out line areas of improvements. Based on the critique of the report, rewrite the report based on the critique. Note format the output so that there are no more then 20 words per-line. Do not break words across lines. Complete this task in full"

        print(gpu_chain.invoke({"question": question}), end="")
        print("\n----------------------\n")


    if __name__ == "__main__":
        warnings.filterwarnings("ignore")
        run_myllm()

The first run takes some time because the model has to be downloaded from Hugging Face.

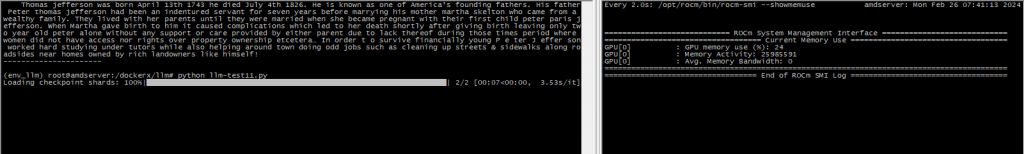

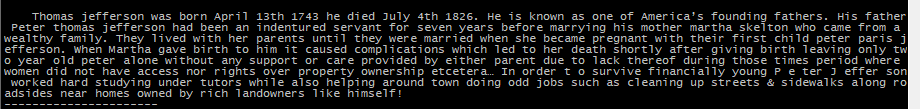

Result:

Quantizing Large-Memory Models

Generally, models with more parameters need more GPU memory (VRAM). If you want to test a large LLM on a GPU with limited memory, you can quantize it.

Forcing a large model into limited GPU memory can produce an error like the following:

    torch.cuda.OutOfMemoryError: HIP out of memory. Tried to allocate 172.00 MiB (GPU 0; 63.98 GiB total capacity; 12.58 GiB already allocated; 0 bytes free; 12.65 GiB reserved in total by PyTorch) If reserved memory is >> allocated memory try setting max_split_size_mb to avoid fragmentation. See documentation for Memory Management and PYTORCH_HIP_ALLOC_CONF

To check how much memory a specific model needs, use the accelerate estimate-memory command:

    (env_llm) root@amdserver:/dockerx/llm# accelerate estimate-memory 01-ai/Yi-6B
    Loading pretrained config for 01-ai/Yi-6B from transformers…
    ┌────────────────────────────────────────────────────┐
    │        Memory Usage for loading 01-ai/Yi-6B        │
    ├───────┬─────────────┬──────────┬───────────────────┤
    │ dtype │Largest Layer│Total Size│Training using Adam│
    ├───────┼─────────────┼──────────┼───────────────────┤
    │float32│  1000.0 MB  │ 21.73 GB │     86.91 GB      │
    │float16│   500.0 MB  │ 10.86 GB │     43.46 GB      │
    │ int8  │   250.0 MB  │  5.43 GB │     21.73 GB      │
    │ int4  │   125.0 MB  │  2.72 GB │     10.86 GB      │
    └───────┴─────────────┴──────────┴───────────────────┘

*Memory required (float32): 21.73 GB
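The rows of this table follow simple arithmetic: float32 stores 4 bytes per parameter, float16 2, int8 1, and int4 half a byte, so each row is the float32 total divided by 1, 2, 4, and 8, and the Adam training column is about 4× the loading size. This is a minimal sketch that reproduces those estimates from a float32 total (21.73 GB here, 174.49 GB for the Mixtral estimate below) and checks them against a GPU's memory. The helper names are illustrative, the 64 GB default is the MI210's nominal memory, and activations, KV cache, and framework overhead are ignored, so a "fits" result is only a lower bound.

```python
from typing import Dict

# Bytes per parameter for each dtype in the estimate-memory table
BYTES_PER_PARAM = {"float32": 4.0, "float16": 2.0, "int8": 1.0, "int4": 0.5}


def estimate_from_fp32(fp32_total_gb: float) -> Dict[str, Dict[str, float]]:
    """Derive per-dtype loading size and Adam training size from the float32 total."""
    # Model size at 1 byte per parameter, in the same GB units as the table
    gb_at_one_byte = fp32_total_gb / BYTES_PER_PARAM["float32"]
    estimates: Dict[str, Dict[str, float]] = {}
    for dtype, nbytes in BYTES_PER_PARAM.items():
        total = gb_at_one_byte * nbytes
        # Adam training ~= weights + gradients + two optimizer states = 4x the weights
        estimates[dtype] = {"total_gb": round(total, 2), "adam_gb": round(4 * total, 2)}
    return estimates


def fits(total_gb: float, gpu_gb: float = 64.0) -> bool:
    """True if the weights alone fit; activations and KV cache are not counted."""
    return total_gb <= gpu_gb


if __name__ == "__main__":
    # float32 totals taken from the two estimate-memory runs in this section
    for model, fp32_gb in {"01-ai/Yi-6B": 21.73, "Mixtral-8x7B": 174.49}.items():
        for dtype, est in estimate_from_fp32(fp32_gb).items():
            print(f"{model:<14} {dtype:<8} {est['total_gb']:>7.2f} GB  fits on 64 GB: {fits(est['total_gb'])}")
```

The computed values can differ from the table in the last digit because the table is rounded from the exact byte counts.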

    (env_llm) root@amdserver:/dockerx/llm# accelerate estimate-memory mistralai/Mixtral-8x7B-Instruct-v0.1
    Loading pretrained config for mistralai/Mixtral-8x7B-Instruct-v0.1 from transformers…
    ┌──────────────────────────────────────────────────────────────────────┐
    │    Memory Usage for loading mistralai/Mixtral-8x7B-Instruct-v0.1     │
    ├───────┬─────────────┬──────────┬─────────────────────────────────────┤
    │ dtype │Largest Layer│Total Size│         Training using Adam         │
    ├───────┼─────────────┼──────────┼─────────────────────────────────────┤
    │float32│   5.44 GB   │174.49 GB │              697.97 GB              │
    │float16│   2.72 GB   │ 87.25 GB │              348.99 GB              │
    │ int8  │   1.36 GB   │ 43.62 GB │              174.49 GB              │
    │ int4  │  696.02 MB  │ 21.81 GB │              87.25 GB               │
    └───────┴─────────────┴──────────┴─────────────────────────────────────┘

*Memory required (float32): 174.49 GB

Some of the pros of a quantized model are as follows:

- Reduced Memory Footprint

- Faster Inference

- Deployment Flexibility

- Compatibility with Hardware Acceleration

Some of the cons are as follows:

- Decreased Model Accuracy

- Quantized models have a limited dynamic range due to the reduced number of available values to represent numbers.

- Quantization introduces quantization error, which is the discrepancy between the original floating-point values and their quantized representations.

- Training quantized LLM models can be more challenging compared to full-precision models.

- Developing and optimizing quantized LLM models can be more complex and time-consuming compared to full-precision models.
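The quantization-error and dynamic-range points above can be seen with a toy example. This is a minimal sketch of symmetric per-tensor int8 quantization in pure Python, a deliberately simplified scheme for illustration (bitsandbytes and GPTQ use more elaborate schemes, such as per-row or per-block scales and outlier handling): every value is mapped to one of 255 integer codes with a single scale, so the round-trip error is at most half the scale, and values much smaller than the scale (0.0005 here) collapse to zero.

```python
from typing import List, Tuple


def quantize_int8(values: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor int8 quantization: q = round(x / scale), clipped to [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize(codes: List[int], scale: float) -> List[float]:
    """Map int8 codes back to approximate float values."""
    return [c * scale for c in codes]


if __name__ == "__main__":
    weights = [0.013, -0.42, 0.9, -1.27, 0.0005, 0.31]
    codes, scale = quantize_int8(weights)
    restored = dequantize(codes, scale)
    errors = [abs(w - r) for w, r in zip(weights, restored)]
    print("codes:", codes)
    print(f"scale: {scale:.5f}, max quantization error: {max(errors):.5f}")
```

The single outlier (-1.27) sets the scale for the whole tensor; that is why real quantizers use finer-grained scales.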

The bitsandbytes Python library is commonly used for quantization, but since it requires CUDA, we cannot use it directly on our AMD hardware.

However, there is a ROCm port of bitsandbytes:

https://git.ecker.tech/mrq/bitsandbytes-rocm

To try it, first clone the repository:

    git clone https://git.ecker.tech/mrq/bitsandbytes-rocm.git

Compile and install it inside the Conda environment (gfx90a is the target for the MI210):

    cd bitsandbytes-rocm
    make hip ROCM_TARGET=gfx90a
    pip install .

For other devices, look up the matching ROCM_TARGET=gfx… value at this link (rocminfo also reports it as the GPU agent's name):

https://www.llvm.org/docs/AMDGPUUsage.html#processors
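If you are not sure which value your GPU needs, the target can be pulled out of rocminfo's output. This is a minimal sketch; the helper names and the regex (gfx followed by hex digits, such as gfx90a for the MI210 used here) are illustrative assumptions about the name format, not part of any ROCm tool.

```python
import re
import shutil
import subprocess
from typing import List, Optional

# gfx target names look like gfx90a, gfx908, gfx1100 (hex digits after "gfx")
GFX_PATTERN = re.compile(r"\bgfx[0-9a-f]+\b")


def gfx_targets_from_text(text: str) -> List[str]:
    """Return the unique gfx target names found in rocminfo-style text, sorted."""
    return sorted(set(GFX_PATTERN.findall(text)))


def detect_gfx_targets() -> Optional[List[str]]:
    """Run rocminfo if it is on PATH and extract gfx targets; None if it is unavailable."""
    if shutil.which("rocminfo") is None:
        return None
    out = subprocess.run(["rocminfo"], capture_output=True, text=True, check=False).stdout
    return gfx_targets_from_text(out)


if __name__ == "__main__":
    targets = detect_gfx_targets()
    if targets:
        print("Candidates for make hip:", ", ".join(f"ROCM_TARGET={t}" for t in targets))
    else:
        print("rocminfo not found or no gfx targets reported")
```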

Verify the installation:

    python -m bitsandbytes
    COMPILED_WITH_CUDA = True
    COMPUTE_CAPABILITIES_PER_GPU = ['9.0']
    ++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++
    ++++++++++++++++++++++ DEBUG INFO END ++++++++++++++++++++++
    ++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++
    Running a quick check that:
    + library is importable
    + CUDA function is callable
    WARNING: Please be sure to sanitize sensible info from any such env vars!
    SUCCESS!
    Installation was successful!

bitsandbytes quantization script:
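As a starting point for such a script, here is a minimal sketch that loads the model with 8-bit weights through transformers' BitsAndBytesConfig. It assumes the ROCm fork above is importable as bitsandbytes and compatible with the installed transformers version, which is not verified here; the function name load_8bit_pipeline is illustrative. It sticks to 8-bit because whether 4-bit loading (load_in_4bit) works depends on the bitsandbytes version the fork is based on.

```python
def load_8bit_pipeline(model_id: str = "01-ai/Yi-6B", max_new_tokens: int = 256):
    """Load a causal LM with 8-bit weights via bitsandbytes and wrap it in a pipeline.

    Assumes the ROCm bitsandbytes build is installed and works with this
    transformers version (an assumption, not verified in this guide).
    """
    # Imported inside the function so the module can be imported without these packages
    from transformers import (AutoModelForCausalLM, AutoTokenizer,
                              BitsAndBytesConfig, pipeline)

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(
        model_id,
        device_map="auto",
        # 8-bit weights: roughly the "int8" row of the estimate-memory table
        quantization_config=BitsAndBytesConfig(load_in_8bit=True),
    )
    return pipeline("text-generation", model=model, tokenizer=tokenizer,
                    max_new_tokens=max_new_tokens)
```

In llm.py, you could replace the tokenizer, model, and pipeline construction in run_myllm with pipe = load_8bit_pipeline(model_id) and keep gpu_llm = HuggingFacePipeline(pipeline=pipe) unchanged. Per the estimate-memory tables, 8-bit weights need roughly a quarter of the float32 size (5.43 GB for Yi-6B, 43.62 GB for Mixtral-8x7B).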

Alternatively, for model quantization (reducing model memory use), we can use AutoGPTQ.

https://github.com/AutoGPTQ/AutoGPTQ

https://huggingface.github.io/autogptq-index/whl/rocm573/

Install the AutoGPTQ library:

    pip install auto-gptq --extra-index-url https://huggingface.github.io/autogptq-index/whl/rocm573/
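A minimal sketch of quantizing a model with AutoGPTQ, following the pattern of AutoGPTQ's quick-start (BaseQuantizeConfig, AutoGPTQForCausalLM.from_pretrained, quantize, save_quantized). The function name, the 4-bit and group_size=128 settings, and the calibration texts are illustrative assumptions; check that your model's architecture is supported by AutoGPTQ before running it.

```python
def quantize_with_autogptq(model_id: str, out_dir: str, calibration_texts, bits: int = 4):
    """Quantize a causal LM with AutoGPTQ and save the quantized weights to out_dir."""
    # Imported inside the function so the module can be imported without these packages
    from auto_gptq import AutoGPTQForCausalLM, BaseQuantizeConfig
    from transformers import AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    # GPTQ calibrates on real text: each example holds input_ids and attention_mask
    examples = [tokenizer(text) for text in calibration_texts]
    quantize_config = BaseQuantizeConfig(bits=bits, group_size=128, desc_act=False)
    model = AutoGPTQForCausalLM.from_pretrained(model_id, quantize_config)
    model.quantize(examples)
    model.save_quantized(out_dir)
    tokenizer.save_pretrained(out_dir)
```

For example, quantize_with_autogptq(model_id, "quantized-model", ["auto-gptq is an easy-to-use model quantization library."]) writes the quantized model to a hypothetical quantized-model directory, which can then be loaded with AutoGPTQForCausalLM.from_quantized("quantized-model", device="cuda:0"); on ROCm builds of PyTorch, "cuda:0" refers to the first AMD GPU. More, and more representative, calibration texts generally give better accuracy than a single sentence.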