RDMA/RoCE Network Testing with MPI Tools

AI network infrastructure needs RoCE testing of the network fabric to make sure that traffic runs with low latency and reaches the highest possible bandwidth.

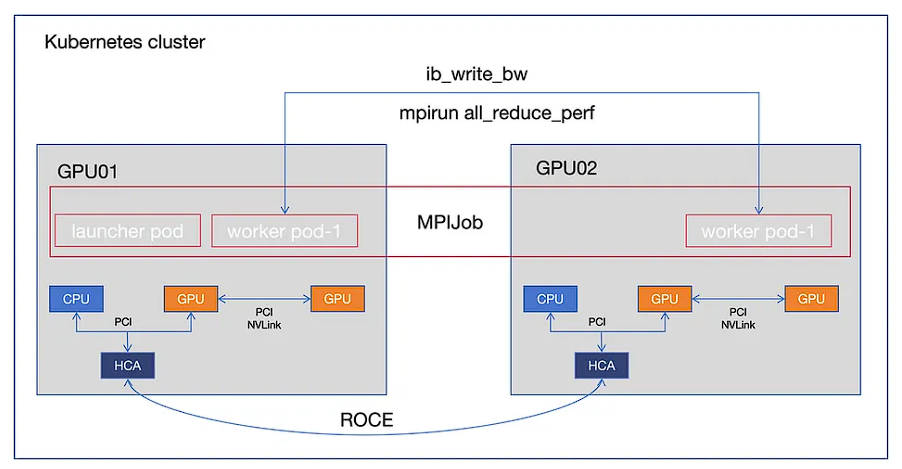

This section describes how to test the RoCE network in an on-premises Kubernetes cluster.

Testing the RoCE network in Kubernetes takes four steps:

- Build a tool container image

We need tools such as:

– the RDMA perftest project, which provides the ib_write_bw/lat, ib_read_bw/lat and ib_send_bw/lat tools

– some tools from the Mellanox HCA driver package, such as show_gids

All of these tools, together with Open MPI and the MPI tests, are available as .deb packages in the Mellanox OFED driver package:
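Once the image is built, a quick way to confirm that these tools actually ended up on the PATH inside the container is a small shell check. This is a minimal sketch: `check_tools` is a hypothetical helper name, and the tool list in the usage comment is simply the set of commands this article uses.

```shell
# Hypothetical helper: report which of the given commands are on PATH.
# Returns 0 if every command is found, 1 if any is missing.
check_tools() {
    missing=0
    for tool in "$@"; do
        if command -v "$tool" >/dev/null 2>&1; then
            echo "found:   $tool -> $(command -v "$tool")"
        else
            echo "missing: $tool" >&2
            missing=1
        fi
    done
    return "$missing"
}

# Example, run inside the tools container:
# check_tools ib_write_bw ib_read_bw ib_send_bw show_gids mpirun
```

The non-zero return code makes the check usable as a gate in scripts, e.g. `check_tools ib_write_bw show_gids || exit 1`.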

$ cd ~/MLNX_OFED_LINUX-23.07-0.5.1.2-ubuntu20.04-x86_64/DEBS

$ ls -l | grep -E "openmpi|perftest|mpitest|iproute2|mlnx-tools"

-rw-r--r-- 1 clouduser clouduser 957268 Aug 24 17:02 mlnx-iproute2_6.3.0-1.2307050_amd64.deb

-rw-r--r-- 1 clouduser clouduser 69500 Aug 24 15:38 mlnx-tools_23.07-0.2307050_amd64.deb

-rw-r--r-- 1 clouduser clouduser 400172 Aug 24 16:42 mpitests_3.2.20-de56b6b.2307050_amd64.deb

-rw-r--r-- 1 clouduser clouduser 19481020 Aug 24 16:40 openmpi_4.1.5rc2-1.2307050_all.deb

-rw-r--r-- 1 clouduser clouduser 271036 Aug 24 15:54 perftest_23.07.0-0.25.g149fbd6.2307050_amd64.deb

$ dpkg-deb -c perftest_23.07.0-0.25.g149fbd6.2307050_amd64.deb | grep bin/ib_

-rwxr-xr-x root/root 248104 2015-03-29 08:58 ./usr/bin/ib_atomic_bw

-rwxr-xr-x root/root 239912 2015-03-29 08:58 ./usr/bin/ib_atomic_lat

-rwxr-xr-x root/root 248104 2015-03-29 08:58 ./usr/bin/ib_read_bw

-rwxr-xr-x root/root 239912 2015-03-29 08:58 ./usr/bin/ib_read_lat

-rwxr-xr-x root/root 252200 2015-03-29 08:58 ./usr/bin/ib_send_bw

-rwxr-xr-x root/root 248104 2015-03-29 08:58 ./usr/bin/ib_send_lat

-rwxr-xr-x root/root 248104 2015-03-29 08:58 ./usr/bin/ib_write_bw

-rwxr-xr-x root/root 239912 2015-03-29 08:58 ./usr/bin/ib_write_lat

Next, we need nccl-tests built with MPI support for our Kubernetes environment. The following Dockerfile installs the Mellanox tools and compiles nccl-tests with MPI enabled:

FROM nvcr.io/nvidia/nemo:23.06

# The base image already contains the nccl-tests binaries and mpirun, but nccl-tests is not compiled with MPI enabled.

WORKDIR /workspace

# copy the build context (the Mellanox .deb packages, etc.) into /workspace

COPY . .

# ****** Step1: install tools from Mellanox packages

# install some dependency packages

RUN apt-get update && apt-get install libpci3 libmnl0 libelf1 pciutils openssh-server iputils-ping -y --no-install-recommends && \

apt-get clean && \

rm -rf /var/lib/apt/lists/*

# Install the tools from Mellanox packages

# install mlnx-iproute2, which provides the /opt/mellanox/iproute2/sbin/ip command

# install perftest, which provides the ib_read/ib_write _lat/_bw commands

# install mpitests, which provides the osu_* commands

RUN dpkg -i *.deb && rm -f *.deb

ENV PATH="${PATH}:/opt/mellanox/iproute2/sbin"

# ****** Step2: compile nccl-tests tools with MPI enabled

# build mpi enabled nccl test commands at: /workspace/nccl-tests/build

RUN cd nccl-tests && make MPI=1 MPI_HOME=/opt/hpcx/ompi/

# ****** Step3: set up sshd environment for mpirun

# mpi-operator mounts the .ssh folder from a Secret. For that to work, we need

# to disable UserKnownHostsFile to avoid write permissions.

# Disabling StrictModes avoids directory and files read permission checks.

RUN echo " UserKnownHostsFile /dev/null" >> /etc/ssh/ssh_config && echo " StrictHostKeyChecking no" >> /etc/ssh/ssh_config &&\

sed -i 's/#\(StrictModes \).*/\1no/g' /etc/ssh/sshd_config

RUN mkdir /run/sshd

In the Dockerfile above, Steps 1 and 2 install and compile the tools. Step 3 sets up the SSH environment that mpirun needs under the MPI Operator framework; /run/sshd is the privilege separation directory that sshd requires at startup.
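The Step 3 edits can be checked outside Docker by applying them to scratch copies of the two config files. This is a minimal sketch: `apply_ssh_tweaks` and `verify_ssh_tweaks` are hypothetical names, the `echo`/`sed` lines are the ones from the Dockerfile, and `sed -i` without a backup suffix assumes GNU sed, as in the Ubuntu-based image.

```shell
# Hypothetical helper: apply the same edits as Step 3 of the Dockerfile.
# $1 = ssh_config (client side), $2 = sshd_config (server side)
apply_ssh_tweaks() {
    ssh_config=$1
    sshd_config=$2
    # Client side: don't record or check known_hosts (the .ssh dir comes from a Secret).
    echo " UserKnownHostsFile /dev/null" >> "$ssh_config"
    echo " StrictHostKeyChecking no" >> "$ssh_config"
    # Server side: turn "#StrictModes yes" into "StrictModes no".
    sed -i 's/#\(StrictModes \).*/\1no/g' "$sshd_config"
}

# Hypothetical helper: succeed only if all three settings are present.
verify_ssh_tweaks() {
    grep -q '^ *UserKnownHostsFile /dev/null' "$1" &&
        grep -q '^ *StrictHostKeyChecking no' "$1" &&
        grep -q '^StrictModes no' "$2"
}
```

Inside a running worker pod, `verify_ssh_tweaks /etc/ssh/ssh_config /etc/ssh/sshd_config && echo ok` confirms that the image was built with the expected SSH settings.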

To build the image:

$ docker build . --tag gpu02:5000/ea-bignlp/ga-participants/nemofw-training-ray-rdma-tools:23.08.02-gy

- Install the MPI Operator

We use the MPI Operator (Kubeflow mpi-operator) to set up the testing fabric. To deploy the operator, run:

$ kubectl apply -f https://raw.githubusercontent.com/kubeflow/mpi-operator/v0.4.0/deploy/v2beta1/mpi-operator.yaml

- Start a testing fabric

The testing fabric is an MPIJob consisting of one launcher pod and several worker pods. To start the MPIJob, run:

$ kubectl apply -f mpi-antiaffinity.yaml
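The contents of mpi-antiaffinity.yaml are not shown here. As a rough, hypothetical sketch of what such a manifest could look like for the kubeflow.org/v2beta1 MPIJob API installed above, the function below prints an MPIJob with one launcher and N workers, plus a pod anti-affinity rule that keeps workers on different nodes. The function name, the `app` label, the `sleep infinity` launcher command, the sshd worker command and the `nvidia.com/gpu` resource name are all assumptions; any RDMA device resources your cluster needs are deliberately left out.

```shell
# Hypothetical generator for an MPIJob similar to mpi-antiaffinity.yaml.
# Arguments: job name, image, number of workers, GPUs per worker.
mpijob_manifest() {
    name=$1 image=$2 workers=$3 gpus=$4
    cat <<EOF
apiVersion: kubeflow.org/v2beta1
kind: MPIJob
metadata:
  name: ${name}
spec:
  slotsPerWorker: 1
  runPolicy:
    cleanPodPolicy: Running
  sshAuthMountPath: /root/.ssh
  mpiReplicaSpecs:
    Launcher:
      replicas: 1
      template:
        spec:
          containers:
          - name: launcher
            image: ${image}
            # assumption: keep the launcher alive so mpirun can be started via kubectl exec
            command: ["sleep", "infinity"]
    Worker:
      replicas: ${workers}
      template:
        metadata:
          labels:
            app: ${name}-worker
        spec:
          affinity:
            podAntiAffinity:
              # at most one worker pod per node
              requiredDuringSchedulingIgnoredDuringExecution:
              - labelSelector:
                  matchLabels:
                    app: ${name}-worker
                topologyKey: kubernetes.io/hostname
          containers:
          - name: worker
            image: ${image}
            # assumption: workers only run sshd; the launcher reaches them over SSH
            command: ["/usr/sbin/sshd", "-De"]
            resources:
              limits:
                nvidia.com/gpu: ${gpus}
EOF
}
```

For example, `mpijob_manifest mpi-affi-test <your-image> 2 4 | kubectl apply -f -` would create a job whose pods follow the mpi-affi-test-launcher-* / mpi-affi-test-worker-N naming seen below. With a required anti-affinity rule, worker pods stay Pending if there are fewer eligible nodes than workers; preferredDuringSchedulingIgnoredDuringExecution relaxes that.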

$ kubectl get pod -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

mpi-affi-test-launcher-kdx6f 1/1 Running 0 78m 172.25.69.178 gpu01 <none> <none>

mpi-affi-test-worker-0 1/1 Running 0 78m 172.25.69.177 gpu01 <none> <none>

mpi-affi-test-worker-1 1/1 Running 0 78m 172.25.69.179 gpu01 <none> <none>

- Run RoCE Test

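Run an RDMA write bandwidth test between the two worker pods. Start the server on worker-0 first, then run the client on worker-1 against the server's RoCE address (192.168.2.2 here, which also appears in the server's GID).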
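Both commands pass the RoCE GID index to `-x` by reading /etc/nccl.conf; note that the server resolved to GID index 4 and the client to 8, so the value is per pod. The sketch below assumes the file contains a line of the form `NCCL_IB_GID_INDEX=<n>` (the key name is an assumption, the source only shows the `cut -d"=" -f2` extraction) and reads that value more defensively. It also pulls the "BW average" column out of the perftest report so results can be logged or compared across runs. Both function names are hypothetical.

```shell
# Hypothetical helper: print the GID index stored in an NCCL config file.
# Assumes a NCCL_IB_GID_INDEX=<n> line; defaults to /etc/nccl.conf.
gid_index_from_conf() {
    awk -F= '$1 == "NCCL_IB_GID_INDEX" { print $2; exit }' "${1:-/etc/nccl.conf}"
}

# Hypothetical helper: read perftest output on stdin and print the
# "BW average" value (4th field) of the first numeric row after "#bytes".
avg_bw_from_perftest() {
    awk '/^ *#bytes/ { hdr = 1; next }
         hdr && $1 ~ /^[0-9]+$/ { print $4; exit }'
}
```

On the client side this could be used as `ib_write_bw 192.168.2.2 --report_gbits -x "$(gid_index_from_conf)" | tee bw.log`, followed by `avg_bw_from_perftest < bw.log`. Non-numeric lines such as the "Conflicting CPU frequency" warning are skipped.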

#*THIS IS SERVER SIDE*#

$ kubectl exec -ti mpi-affi-test-worker-0 -- bash

# ib_write_bw --report_gbits -x $(cat /etc/nccl.conf | cut -d"=" -f2)

************************************

* Waiting for client to connect... *

************************************

---------------------------------------------------------------------------------------

RDMA_Write BW Test

Dual-port : OFF Device : mlx5_0

Number of qps : 1 Transport type : IB

Connection type : RC Using SRQ : OFF

PCIe relax order: ON

ibv_wr* API : ON

CQ Moderation : 1

Mtu : 4096[B]

Link type : Ethernet

GID index : 4

Max inline data : 0[B]

rdma_cm QPs : OFF

Data ex. method : Ethernet

---------------------------------------------------------------------------------------

local address: LID 0000 QPN 0x148b PSN 0xceed8e RKey 0x205900 VAddr 0x007f9c72884000

GID: 00:00:00:00:00:00:00:00:00:00:255:255:192:168:02:02

remote address: LID 0000 QPN 0x148d PSN 0x76d776 RKey 0x205a00 VAddr 0x007f37364ce000

GID: 00:00:00:00:00:00:00:00:00:00:255:255:192:168:02:03

---------------------------------------------------------------------------------------

#bytes #iterations BW peak[Gb/sec] BW average[Gb/sec] MsgRate[Mpps]

65536 5000 11531.98 11531.66 0.184507

---------------------------------------------------------------------------------------

#*THIS IS CLIENT SIDE*#

$ kubectl exec -ti mpi-affi-test-worker-1 -- bash

# ib_write_bw 192.168.2.2 --report_gbits -x $(cat /etc/nccl.conf | cut -d"=" -f2)

---------------------------------------------------------------------------------------

RDMA_Write BW Test

Dual-port : OFF Device : mlx5_0

Number of qps : 1 Transport type : IB

Connection type : RC Using SRQ : OFF

PCIe relax order: ON

ibv_wr* API : ON

TX depth : 128

CQ Moderation : 1

Mtu : 4096[B]

Link type : Ethernet

GID index : 8

Max inline data : 0[B]

rdma_cm QPs : OFF

Data ex. method : Ethernet

---------------------------------------------------------------------------------------

local address: LID 0000 QPN 0x1493 PSN 0xcbf86a RKey 0x205900 VAddr 0x007f42e7e8a000

GID: 00:00:00:00:00:00:00:00:00:00:255:255:192:168:02:03

remote address: LID 0000 QPN 0x1494 PSN 0xce4fbe RKey 0x205a00 VAddr 0x007fdd0d213000

GID: 00:00:00:00:00:00:00:00:00:00:255:255:192:168:02:02

---------------------------------------------------------------------------------------

#bytes #iterations BW peak[Gb/sec] BW average[Gb/sec] MsgRate[Mpps]

Conflicting CPU frequency values detected: 900.000000 != 3300.000000. CPU Frequency is not max.

65536 5000 107.84 107.84 0.205690

----------------------------------------------------------------------------------------

Run the MPI NCCL test

Finally, we can run the NCCL all_reduce test with MPI: two processes (one per worker pod, placed with --map-by node), each driving 4 GPUs, for 8 GPUs in total:

$ kubectl exec -ti mpi-affi-test-launcher-kdx6f -- mpirun --allow-run-as-root --map-by node -np 2 -x PATH -x NCCL_DEBUG=WARN ./nccl-tests/build/all_reduce_perf -b 8 -e 128M -f 2 -g 4

Warning: Permanently added 'mpi-affi-test-worker-1.mpi-affi-test-worker.default.svc' (ED25519) to the list of known hosts.

Warning: Permanently added 'mpi-affi-test-worker-0.mpi-affi-test-worker.default.svc' (ED25519) to the list of known hosts.

# nThread 1 nGpus 4 minBytes 8 maxBytes 134217728 step: 2(factor) warmup iters: 5 iters: 20 agg iters: 1 validation: 1 graph: 0

#

# Using devices

# Rank 0 Group 0 Pid 481 on mpi-affi-test-worker-0 device 0 [0x9c] NVIDIA A40

# Rank 1 Group 0 Pid 481 on mpi-affi-test-worker-0 device 1 [0x9d] NVIDIA A40

# Rank 2 Group 0 Pid 481 on mpi-affi-test-worker-0 device 2 [0xa0] NVIDIA A40

# Rank 3 Group 0 Pid 481 on mpi-affi-test-worker-0 device 3 [0xa4] NVIDIA A40

# Rank 4 Group 0 Pid 479 on mpi-affi-test-worker-1 device 0 [0x35] NVIDIA A40

# Rank 5 Group 0 Pid 479 on mpi-affi-test-worker-1 device 1 [0x36] NVIDIA A40

# Rank 6 Group 0 Pid 479 on mpi-affi-test-worker-1 device 2 [0x39] NVIDIA A40

# Rank 7 Group 0 Pid 479 on mpi-affi-test-worker-1 device 3 [0x3d] NVIDIA A40

NCCL version 2.18.3+cuda12.2

#

# out-of-place in-place

# size count type redop root time algbw busbw #wrong time algbw busbw #wrong

# (B) (elements) (us) (GB/s) (GB/s) (us) (GB/s) (GB/s)

8 2 float sum -1 19.72 0.00 0.00 0 19.44 0.00 0.00 0

16 4 float sum -1 20.03 0.00 0.00 0 19.46 0.00 0.00 0

32 8 float sum -1 19.79 0.00 0.00 0 19.43 0.00 0.00 0

64 16 float sum -1 20.28 0.00 0.01 0 20.24 0.00 0.01 0

128 32 float sum -1 21.17 0.01 0.01 0 20.72 0.01 0.01 0

256 64 float sum -1 20.84 0.01 0.02 0 20.87 0.01 0.02 0

512 128 float sum -1 22.66 0.02 0.04 0 22.27 0.02 0.04 0

1024 256 float sum -1 24.04 0.04 0.07 0 23.80 0.04 0.08 0

2048 512 float sum -1 27.08 0.08 0.13 0 26.74 0.08 0.13 0

4096 1024 float sum -1 29.44 0.14 0.24 0 29.08 0.14 0.25 0

8192 2048 float sum -1 31.49 0.26 0.46 0 30.59 0.27 0.47 0

16384 4096 float sum -1 40.77 0.40 0.70 0 40.94 0.40 0.70 0

32768 8192 float sum -1 57.02 0.57 1.01 0 57.29 0.57 1.00 0

65536 16384 float sum -1 87.10 0.75 1.32 0 88.36 0.74 1.30 0

131072 32768 float sum -1 96.20 1.36 2.38 0 94.14 1.39 2.44 0

262144 65536 float sum -1 119.1 2.20 3.85 0 117.5 2.23 3.90 0

524288 131072 float sum -1 158.9 3.30 5.78 0 158.6 3.30 5.78 0

1048576 262144 float sum -1 230.7 4.54 7.95 0 229.7 4.56 7.99 0

2097152 524288 float sum -1 378.3 5.54 9.70 0 377.0 5.56 9.73 0

4194304 1048576 float sum -1 699.7 5.99 10.49 0 696.3 6.02 10.54 0

8388608 2097152 float sum -1 1283.8 6.53 11.43 0 1285.2 6.53 11.42 0

16777216 4194304 float sum -1 2522.8 6.65 11.64 0 2525.0 6.64 11.63 0

33554432 8388608 float sum -1 5094.7 6.59 11.53 0 5088.9 6.59 11.54 0

67108864 16777216 float sum -1 9958.5 6.74 11.79 0 9943.2 6.75 11.81 0

134217728 33554432 float sum -1 19668 6.82 11.94 0 19647 6.83 11.96 0

# Out of bounds values : 0 OK

# Avg bus bandwidth : 4.10502

For more details about the arguments used to run the test with MPI, see the nccl-tests documentation on running the tests with mpirun.
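To read the table above: nccl-tests reports algbw (message size divided by time) and busbw, which for all_reduce is algbw * 2(n-1)/n with n ranks. With n = 8 the factor is 1.75, so the last row's 6.82 GB/s algbw corresponds to the 11.94 GB/s busbw shown. The "Avg bus bandwidth" of 4.10502 appears to be the mean busbw over all 25 message sizes (the out-of-place busbw column averages to about 4.10), so it is pulled down by the small messages; the large-message rows are the better indicator of peak throughput. Also note that perftest with --report_gbits reports Gb/s while nccl-tests reports GB/s, so multiply by 8 before comparing (11.94 GB/s is about 95.5 Gb/s). The helpers below are hypothetical names; the busbw formula is the one nccl-tests documents for all_reduce.

```shell
# all_reduce bus bandwidth: busbw = algbw * 2 * (n - 1) / n, n = total ranks (GPUs).
busbw_allreduce() {
    awk -v algbw="$1" -v n="$2" 'BEGIN { printf "%.2f\n", algbw * 2 * (n - 1) / n }'
}

# Convert GB/s (nccl-tests) to Gb/s (perftest --report_gbits).
gbytes_to_gbits() {
    awk -v v="$1" 'BEGIN { printf "%.2f\n", v * 8 }'
}

# Mean out-of-place busbw (8th field) over the numeric result rows of
# all_reduce_perf output read on stdin; header and log lines are skipped.
mean_busbw() {
    awk '$1 ~ /^[0-9]+$/ && NF >= 13 { sum += $8; n++ }
         END { if (n) printf "%.2f\n", sum / n }'
}
```

For example, saving the launcher output to a file and piping it through `mean_busbw` gives a single figure to track across runs, while `busbw_allreduce` shows how a given algbw maps onto the busbw column for a different GPU count.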

Reference: